I don’t think it does, but it’s conceivable that it could. Maybe there’s more to intelligence than just information processing - or maybe it’s tied to consciousness itself. I can’t imagine the added ability to have subjective experiences would hurt anyone’s intelligence, at least.

Freedom is the right to tell people what they do not want to hear.

- George Orwell


The same argument applies to consciousness as well, but right now I’m talking about general intelligence.


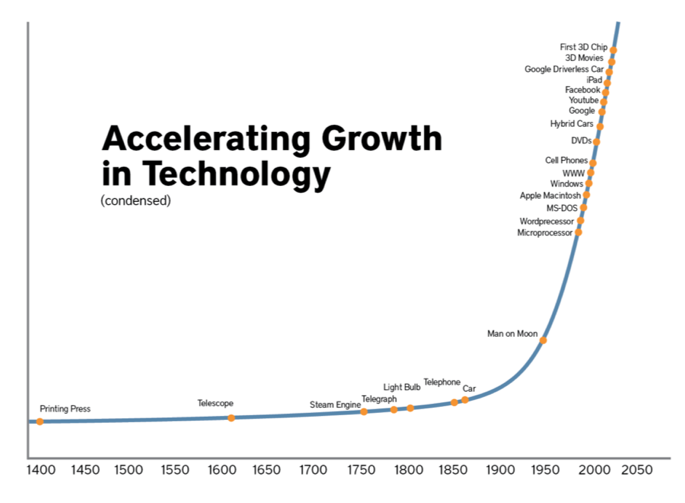

The chart is just an illustration to highlight my point. As I already said, pick a different chart if you prefer; it doesn’t change the argument I’m making.

It took us hundreds of thousands of years to go from stone tools to controlling fire. Ten thousand years to go from rope to fish hook. And then just 60 years to go from flight to space flight.

I’ll happily grant you rapid technological progress even over the past thousand years. My point still stands - that’s yesterday on the timeline I’m talking about.

If you lived 50,000 years ago, you’d see no technological advancement over your entire lifetime. Now, you can’t even predict what technology will look like ten years from now. Never before in human history have we taken such leaps as we have in the past thousand years. Put that on a graph and you’d see a steady line barely sloping upward from the first humans until about a thousand years ago - then a massive spike shooting almost vertically, with no signs of slowing down. And we’re standing right on top of that spike.

In the context of all of human history, the period we’re living in right now is highly unusual - which is why I claim that on this timeline, AGI might as well be here tomorrow.

Not really the same thing. The Tic Tac Toe brute force is just a lookup - every possible state is pre-solved and the program just spits back the stored move. There’s no reasoning or decision-making happening. Atari Chess, on the other hand, couldn’t possibly store all chess positions, so it actually ran a search and evaluated positions on the fly. That’s why it counts as AI: it was computing moves, not just retrieving them.
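To make the lookup-versus-search distinction concrete, here’s a rough Python sketch. It solves tic-tac-toe once, offline, and stores the best move for every reachable position, so “playing” afterwards is just a dictionary lookup. `search_move` instead runs a depth-limited minimax at move time with a crude evaluation function, which is the general idea behind a chess program that can’t tabulate the game. All the function names and the evaluation heuristic are my own illustrative assumptions, not how the Atari program was actually written.

```python
from functools import lru_cache

# A board is a tuple of 9 cells ("X", "O" or " "), indexed 0-8 row by row.
# X always moves first.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),   # rows
         (0, 3, 6), (1, 4, 7), (2, 5, 8),   # columns
         (0, 4, 8), (2, 4, 6)]              # diagonals


def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def moves(board):
    return [i for i, cell in enumerate(board) if cell == " "]


def to_move(board):
    # X moves whenever both players have placed the same number of marks.
    return "X" if board.count("X") == board.count("O") else "O"


def play(board, i):
    return board[:i] + (to_move(board),) + board[i + 1:]


def outcome(board):
    """+1 if X has won, -1 if O has won, 0 for a full-board draw, None otherwise."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    return 0 if not moves(board) else None


@lru_cache(maxsize=None)
def value(board):
    """Exact minimax value from X's point of view (only used offline)."""
    result = outcome(board)
    if result is not None:
        return result
    scores = [value(play(board, i)) for i in moves(board)]
    return max(scores) if to_move(board) == "X" else min(scores)


def build_table():
    """Offline step: store the best move for every reachable, unfinished position."""
    table, stack = {}, [tuple(" " * 9)]
    while stack:
        board = stack.pop()
        if board in table or outcome(board) is not None:
            continue
        pick = max if to_move(board) == "X" else min
        table[board] = pick(moves(board), key=lambda i: value(play(board, i)))
        stack.extend(play(board, i) for i in moves(board))
    return table


TABLE = build_table()


def lookup_move(board):
    # Pure retrieval: no reasoning happens at move time.
    return TABLE[board]


def heuristic(board):
    # Crude evaluation: lines X could still complete minus lines O could still
    # complete, scaled into (-1, 1) so a real win or loss always outranks it.
    open_x = sum(all(board[i] != "O" for i in line) for line in LINES)
    open_o = sum(all(board[i] != "X" for i in line) for line in LINES)
    return (open_x - open_o) / 10


def search(board, depth):
    """Depth-limited minimax: exact at game end, heuristic when depth runs out."""
    result = outcome(board)
    if result is not None:
        return result
    if depth == 0:
        return heuristic(board)
    scores = [search(play(board, i), depth - 1) for i in moves(board)]
    return max(scores) if to_move(board) == "X" else min(scores)


def search_move(board, depth=2):
    # Decided on the fly: look `depth` plies ahead and evaluate the leaves.
    pick = max if to_move(board) == "X" else min
    return pick(moves(board), key=lambda i: search(play(board, i), depth - 1))


if __name__ == "__main__":
    board = ("X", "X", " ", "O", "O", " ", " ", " ", " ")  # X to move
    print(lookup_move(board), search_move(board))  # 2 2
```

Both functions find the same winning square here, but they get there in completely different ways. The table only works because tic-tac-toe has a few thousand reachable positions; chess has far too many to store, which is why a chess program has to search and evaluate while it plays.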

> Trying to claim there was vastly less innovation in the entire 19th century than there was in the past decade is just nonsense.

And where have I made such a claim?

The chart is just for illustration purposes to make a point. I don’t see why you need to be such a dick about it. Feel free to reference any other chart you like better that shows technological progress throughout human history - they all look the same: for most of history nothing happened, and then everything happened. If you don’t think this progress has been accelerating explosively over the past few hundred years, then I don’t know what to tell you. People 10k years ago had basically the same technology as people 30k years ago. Now compare that with what has happened even just during your lifetime.

We’re probably going to find out sooner rather than later.

I can think of only two ways that we don’t reach AGI eventually.

- General intelligence is substrate dependent, meaning that it’s inherently tied to biological wetware and cannot be replicated in silicon.
- We destroy ourselves before we get there.

Other than that, we’ll keep incrementally improving our technology and we’ll get there eventually. It might take us 5 years or 200, but it’s coming.


That’s just false. The chess opponent on Atari qualifies as AI.

Even my smartphone doesn’t have an OLED display.

If I were in the market for a new TV, I’d probably go for an OLED, assuming image burn-in is no longer an issue with them, but I’ll happily use my 15-year-old LED TV for as long as it lasts. I can tell the difference in contrast when they’re side by side with an LED/LCD, but in normal daily use I don’t pay any attention to it.


I don’t even want 4K. 1080p is more than good enough.


I think comparing an LLM to a brain is a category mistake. LLMs aren’t designed to simulate how the brain works - they’re just statistical engines trained on language. Trying to mimic the human brain is a whole different tradition of AI research.

An LLM gives the kind of answers you’d expect from something that understands - but that doesn’t mean it actually does. The danger is sliding from “it acts like” to “it is.” I’m sure it has some kind of world model and is intelligent to an extent, but I think “understands” is too charitable when we’re talking about an LLM.

And about the idea that “if it’s just statistics, we should be able to see how it works” - I think that’s backwards. The reason it’s so hard to follow is that it’s nothing but raw statistics spread across billions of connections. If it were built on clean, human-readable rules, you could trace them step by step. But with this kind of system, it’s more like staring into noise that just happens to produce meaning when you ask the right question.

I also can’t help laughing a bit at myself for once being the “anti-AI” guy here. Usually I’m the one sticking up for it.


You’re right - in the NLP field, LLMs are described as doing “language understanding,” and that’s fine as long as we’re clear what that means. They process natural language input and can generate coherent output, which in a technical sense is a kind of understanding.

But that shouldn’t be confused with human-like understanding. LLMs simulate it statistically, without any grounding in meaning, concepts or reference to the world. That’s why earlier GPT models could produce paragraphs of flawless grammar that, once you read closely, were complete nonsense. They looked like understanding, but nothing underneath was actually tied to reality.

So I’d say both are true: LLMs “understand” in the NLP sense, but it’s not the same thing as human understanding. Mixing those two senses of the word is where people start talking past each other.


But it doesn’t understand - at least not in the sense humans do. When you give it a prompt, it breaks it into tokens, runs them through the statistical patterns it learned from its training data, and generates the most statistically likely continuation. It doesn’t “know” what it’s saying; it’s just producing the next most probable output. That’s why it often fails at simple tasks like counting the letters in a word - it works with tokens rather than individual letters, so it isn’t actually reading and analyzing the word, just predicting text. In that sense it’s simulating understanding, not possessing it.
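A toy illustration of the letter-counting problem, assuming a made-up vocabulary and a simple greedy longest-match rule. Real tokenizers (BPE-style ones, for example) learn their pieces from data and will split words differently, so treat the vocabulary and IDs below as hypothetical. The point is just that the model receives a couple of opaque integers, not a sequence of letters.

```python
# Hypothetical toy vocabulary: subword pieces mapped to integer IDs.
# Longer pieces overlap shorter ones; greedy matching prefers the longest.
VOCAB = {"straw": 101, "berry": 102, "st": 103, "raw": 104,
         "b": 105, "e": 106, "r": 107, "y": 108, "s": 109,
         "t": 110, "a": 111, "w": 112}


def tokenize(text):
    """Greedy longest-match segmentation into vocabulary pieces."""
    pieces, i = [], 0
    while i < len(text):
        for j in range(len(text), i, -1):  # try the longest candidate first
            if text[i:j] in VOCAB:
                pieces.append(text[i:j])
                i = j
                break
        else:
            raise ValueError(f"no vocabulary piece matches {text[i]!r}")
    return pieces


def encode(text):
    # What the model actually receives: a list of opaque integer IDs.
    return [VOCAB[p] for p in tokenize(text)]


word = "strawberry"
print(tokenize(word))   # ['straw', 'berry']
print(encode(word))     # [101, 102]
print(word.count("r"))  # 3, a fact about letters that the IDs don't expose
```

Counting the r’s is trivial when you can see the characters, but from `[101, 102]` the model has to have picked up the spelling of each piece indirectly from its training data.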


> This is exactly the use-case for an LLM

I don’t think it is. An LLM is a language-generating tool, not a language-understanding one.


It’s not really about “AI stonks.” If one genuinely believes that AGI will be the end of us, then any form of retirement saving is just a waste of time.

I really think that most investors aren’t as hyped about AI stocks as the anti-AI crowd online wants us to believe. They may have increased the weight of the tech sector in their portfolios, but the vast majority of investors are aware of the risk of not diversifying, and anyone with real wealth to invest probably isn’t putting it all on OpenAI.

The recent drop in AI stocks that was in the news a week or two back doesn’t even register in my portfolio’s value, even though nearly all of its top holdings are tech companies.


But the title had “AI” in it.

Also to answer your question: https://lemvotes.org/post/programming.dev/post/36238264


LLMs, as the name suggests, are language models - not knowledge machines. Answering questions correctly isn’t what they’re designed to do. The fact that they get anything right isn’t because they “know” things, but because they’ve been trained on a lot of correct information. That’s why they come off as more intelligent than they really are. At the end of the day, they were built to generate natural-sounding language - and that’s all. Just because something can speak doesn’t mean it knows what it’s talking about.
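As a deliberately tiny stand-in for a language model (a bigram word counter, not a transformer; the corpus and function names are made up for illustration), the sketch below “answers” by repeatedly appending whichever word most often followed the previous one in its training text. It gets France right only because the correct answer dominated the data, and it says the exact same thing about Italy, because it has no notion of what any of the words refer to.

```python
from collections import Counter, defaultdict

# Hypothetical toy training text: the "facts" here are only word-order statistics.
CORPUS = (
    "the capital of france is paris . "
    "the capital of france is paris . "
    "the capital of france is lyon . "
    "the capital of italy is rome ."
).split()


def train_bigrams(tokens):
    """Count how often each word immediately follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def continue_greedily(counts, prompt, max_words=5):
    """Keep appending the single most frequent next word (greedy decoding)."""
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # never saw this word followed by anything
        nxt = followers.most_common(1)[0][0]
        words.append(nxt)
        if nxt == ".":
            break
    return " ".join(words)


model = train_bigrams(CORPUS)
print(continue_greedily(model, "the capital of france is"))  # the capital of france is paris .
print(continue_greedily(model, "the capital of italy is"))   # the capital of italy is paris .
```

Real LLMs condition on far longer contexts using learned weights rather than raw counts, so they’re much harder to trip up this way, but their output is still a continuation ranked by learned probability.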

Well, first of all, like I already said, I don’t think either general intelligence or consciousness is substrate dependent, so I’m not going to try to prove that it is - it’s not a belief I hold. I’m simply acknowledging the possibility that there might be something more mysterious about the workings of the human mind that we don’t yet understand, so I’m not going to rule it out when I have no way of disproving it.

Secondly, both claims - that consciousness has very little influence on the mind, and that general intelligence isn’t complicated to understand - are incredibly bold statements that I strongly disagree with, especially the one about consciousness, though in my experience there’s a good chance we’re using that term to mean different things.

To me, consciousness is the fact of subjective experience - that it feels like something to be you; that there are qualia to experience.

I don’t know what’s left of the human mind once you strip away the ability to experience, but I’d argue we’d be unrecognizable without it. It’s what makes us human. It’s where our motivation for everything comes from - the need for social relationships, the need to eat, stay warm, stay healthy, the need to innovate. At its core, it all stems from the desire to feel - or not feel - something.