Interesting piece. The author claims that LLMs like Claude and ChatGPT are merely interfaces to the same kinds of algorithms corporations have been using for decades, and that the real “AI Revolution” is that regular people now have access to them, where before we did not.

From the article:

> Consider what it took to use business intelligence software in 2015. You needed to buy the software, which cost thousands or tens of thousands of dollars. You needed to clean and structure your data. You needed to learn SQL or Tableau or whatever visualization tool you were using. You needed to know what questions to ask. The cognitive and financial overhead was high enough that only organizations bothered.
>
> Language models collapsed that overhead to nearly zero. You don’t need to learn a query language. You don’t need to structure your data. You don’t need to know the right technical terms. You just describe what you want in plain English. The interface became conversation.

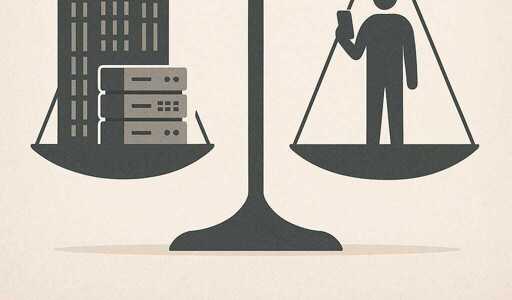

First, I want to ask about these price-tracking websites: do they update in real time? Do they rely on confidential data or public data? Do they send alerts when prices change and, in the case of, say, applying for an apartment, time the application submission for exactly the right moment? Do they collaborate with each other?

See, I just learned about algorithmic price fixing: companies in nearly every industry, every facet of life, give their algorithms access to vast amounts of data, both public and private, and these algorithms share that data with each other, allowing the companies to collude indirectly and fix prices without human intervention. What can we common folk do against that?

I’m just saying, you’re mentioning search engines, and the author says

So how can I, with my spreadsheets and my search engine, possibly stand up to Big AI? David and Goliath was a nice fable, but now Goliath is back, and he has friends, and laws protecting them, and all David has is a sling and a single rock.